APIMyLlama - API up your Ollama Server

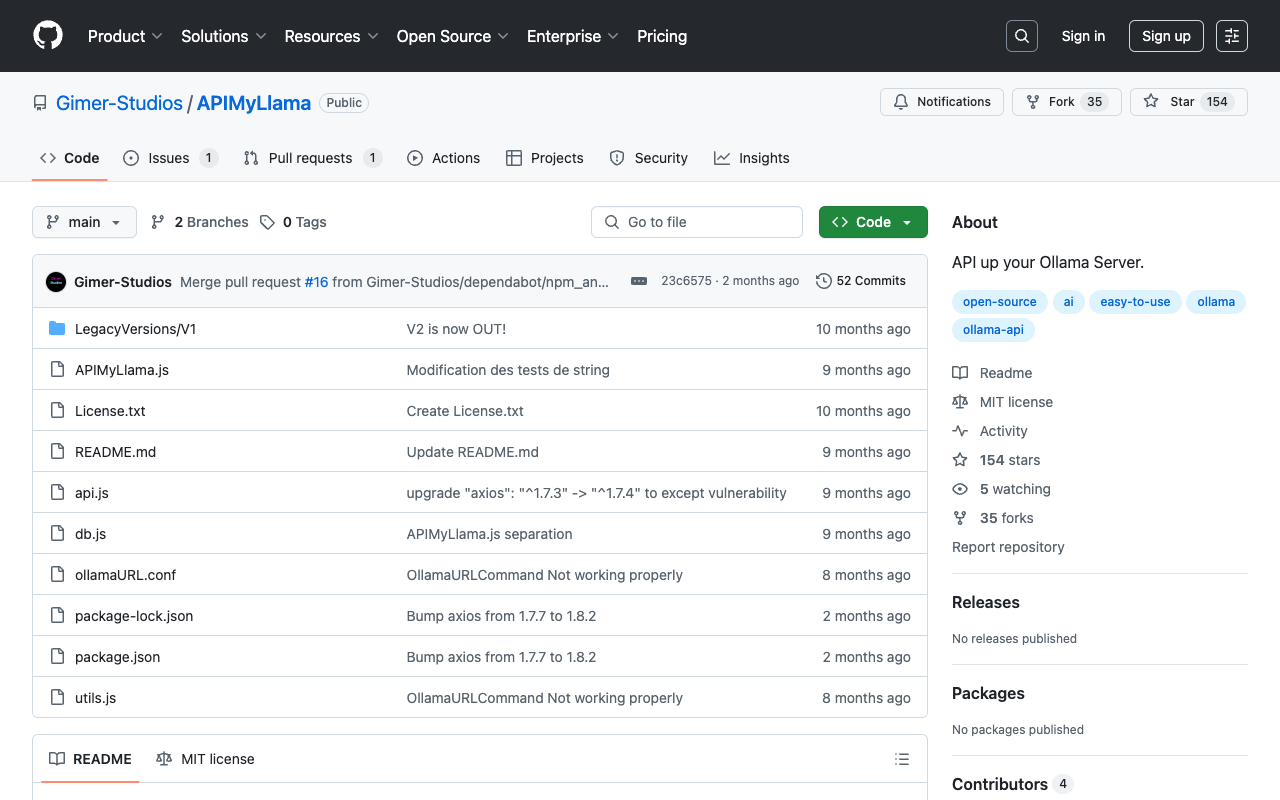

APIMyLlama is a server application developed by Gimer Studios that sits in front of the Ollama API. It lets you issue API keys so that other users and applications can access the models running on your Ollama server.

To get started with APIMyLlama, you first need to set up Ollama. If you haven't already, install Ollama for your operating system and pull the model you want to serve, for example with `ollama pull llama3`. When the download finishes, start the Ollama server with `ollama serve`.
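Before moving on, you may want to confirm that Ollama is running and that the model was pulled. One way is to query Ollama's own REST API, which listens on port 11434 by default. The Python sketch below assumes a local install at `http://localhost:11434` and reads the `/api/tags` endpoint, which lists locally available models; the helper names `fetch_local_models` and `has_model` are illustrative and are not part of Ollama or APIMyLlama.

```python
import json
import urllib.request

# Assumed local Ollama address; 11434 is Ollama's default port.
OLLAMA_URL = "http://localhost:11434"


def fetch_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """Return the parsed JSON from Ollama's /api/tags endpoint (locally pulled models)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


def has_model(tags: dict, name: str) -> bool:
    """True if a model such as 'llama3' appears in an /api/tags response.

    Ollama reports tagged names like 'llama3:latest', so a bare name is
    also matched against the part before the colon.
    """
    for model in tags.get("models", []):
        full = model.get("name", "")
        if full == name or full.split(":", 1)[0] == name:
            return True
    return False


if __name__ == "__main__":
    try:
        tags = fetch_local_models()
    except OSError as exc:  # URLError (e.g. connection refused) and timeouts are OSError subclasses
        print(f"Ollama is not reachable at {OLLAMA_URL}: {exc}")
    else:
        print("llama3 pulled:", has_model(tags, "llama3"))
```

With `ollama serve` running and the pull complete, the script should report `llama3 pulled: True`; if the server is not up, it reports that Ollama is unreachable instead of raising.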

Next, host the APIMyLlama API on your server. Install Node.js, clone the APIMyLlama repository from GitHub, change into the cloned directory, and install its dependencies with `npm install`. Then start the application with `node APIMyLlama.js`.

During startup, APIMyLlama prompts you for the port number its API should listen on. Choose a port that is not already used by Ollama or any other application on your server. If clients outside your local network will use the API keys, forward this port on your router. You will also be asked for the port of your Ollama server, which is 11434 by default.
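If you are unsure which port to enter at the prompt, you can check for a free one first. This Python sketch (the function names and candidate ports are hypothetical, not part of APIMyLlama) tries to bind each candidate and skips Ollama's default port, 11434; a successful bind means no other socket currently holds that address.

```python
import socket

OLLAMA_DEFAULT_PORT = 11434


def port_is_free(port: int, host: str = "0.0.0.0") -> bool:
    """Try to bind a TCP socket to (host, port); success means the port is currently unused."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:  # typically EADDRINUSE when another process holds the port
            return False
        return True  # the socket is closed on leaving the with-block, releasing the port


def pick_api_port(candidates, ollama_port: int = OLLAMA_DEFAULT_PORT, host: str = "0.0.0.0"):
    """Return the first candidate that is not Ollama's port and is currently free, else None."""
    for port in candidates:
        if port != ollama_port and port_is_free(port, host):
            return port
    return None


if __name__ == "__main__":
    print("Suggested API port:", pick_api_port(range(3000, 3010)))
```

The check is only a snapshot: another program could claim the port between this test and APIMyLlama's startup, so treat the result as a hint rather than a guarantee.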

APIMyLlama provides several commands for managing API keys and configuring the server. They cover key management (generating, listing, and removing keys, and adding custom keys), server settings (changing the API port while the server is running and changing the Ollama server port), and alert webhooks (adding, listing, and deleting webhooks).
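The key-management operations above can be pictured with a small in-memory store. This is a hypothetical Python sketch, not APIMyLlama's code (the project itself is a Node.js application, and how it stores keys is not covered here): generated keys are random hex strings from Python's `secrets` module, and validation compares keys with `secrets.compare_digest`.

```python
import secrets


class ApiKeyStore:
    """Hypothetical in-memory key store illustrating generate/list/remove/add-custom.

    Not APIMyLlama's implementation; a real deployment would persist keys to disk
    or a database so they survive restarts.
    """

    def __init__(self) -> None:
        self._keys: set[str] = set()

    def generate(self, nbytes: int = 32) -> str:
        # token_hex(n) returns 2*n hex characters drawn from a cryptographically secure source.
        key = secrets.token_hex(nbytes)
        self._keys.add(key)
        return key

    def add_custom(self, key: str) -> None:
        if not key:
            raise ValueError("custom key must be non-empty")
        self._keys.add(key)

    def remove(self, key: str) -> bool:
        # Returns True if the key existed and was removed.
        if key in self._keys:
            self._keys.remove(key)
            return True
        return False

    def list_keys(self) -> list[str]:
        return sorted(self._keys)

    def is_valid(self, key: str) -> bool:
        # compare_digest does not stop at the first differing character,
        # which avoids leaking key contents through timing differences.
        return any(secrets.compare_digest(key.encode(), k.encode()) for k in self._keys)
```

For example, `store = ApiKeyStore(); k = store.generate()` yields a 64-character key that `store.is_valid(k)` accepts until `store.remove(k)` is called.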

If you're interested in learning more about APIMyLlama and how it can help you manage access to your Ollama server, visit the GitHub repository.