KubeAI: Private OpenAI on Kubernetes - Serving LLMs Privately

KubeAI is a platform for running a private, OpenAI-compatible AI service on Kubernetes. It serves open-source (OSS) LLMs on CPUs or GPUs and exposes an OpenAI-compatible API, so it can act as a drop-in replacement for OpenAI in existing clients. It is built to scale, including autoscaling from zero, and it does not depend on add-ons such as Istio or Knative.
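"Autoscaling from zero" means a model can run with no replicas while idle, and replicas are started once requests arrive. The sketch below illustrates the idea with a generic, hypothetical rule that sizes replicas from the number of in-flight requests. The function name, the `target_per_replica` parameter, and the default bounds are assumptions for illustration only; this is not KubeAI's actual scaling algorithm or configuration.

```python
import math


def desired_replicas(active_requests: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 3) -> int:
    """Hypothetical request-based scaling rule (illustration, not KubeAI's code).

    Returns how many replicas are needed so that each one handles at most
    `target_per_replica` in-flight requests, clamped to [min_replicas, max_replicas].
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    # With no traffic this is 0, so min_replicas=0 lets the model scale to zero.
    needed = math.ceil(active_requests / target_per_replica)
    # Clamp into the configured replica range.
    return max(min_replicas, min(max_replicas, needed))
```

With `min_replicas=0`, an idle model uses no compute, at the cost of a cold start: the first request after an idle period has to wait for a replica (and its model) to come up.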

Because the API is OpenAI-compatible, users keep a familiar interface while retaining privacy and control over the models they run. Inference is handled by OSS model servers, including vLLM and Ollama, and KubeAI also ships a Chat UI for interactive use.
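Since the API follows OpenAI's conventions, a client mainly needs to point at a different base URL. The minimal sketch below uses only the Python standard library to send a request to the OpenAI-style `/chat/completions` endpoint. The base URL `http://localhost:8000/openai/v1` (for example, after port-forwarding the KubeAI service) and the model name are assumptions; substitute the values from your own deployment.

```python
import json
import urllib.request

# Assumption: KubeAI is reachable locally (e.g. via port-forward); adjust to your setup.
BASE_URL = "http://localhost:8000/openai/v1"


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style POST /chat/completions request with one user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(base_url: str, model: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    req = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    # OpenAI-compatible servers put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

For example, `chat(BASE_URL, "my-model", "Hello!")` returns the reply as a string, where `my-model` is a hypothetical stand-in for the name of a model you have deployed. The official `openai` Python client should also work by passing the KubeAI URL as its `base_url`.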

For teams that want to use LLMs on Kubernetes while keeping data private and under their own control, KubeAI is a compelling option: it needs no extra dependencies, autoscales (down to zero), and supports a variety of models.

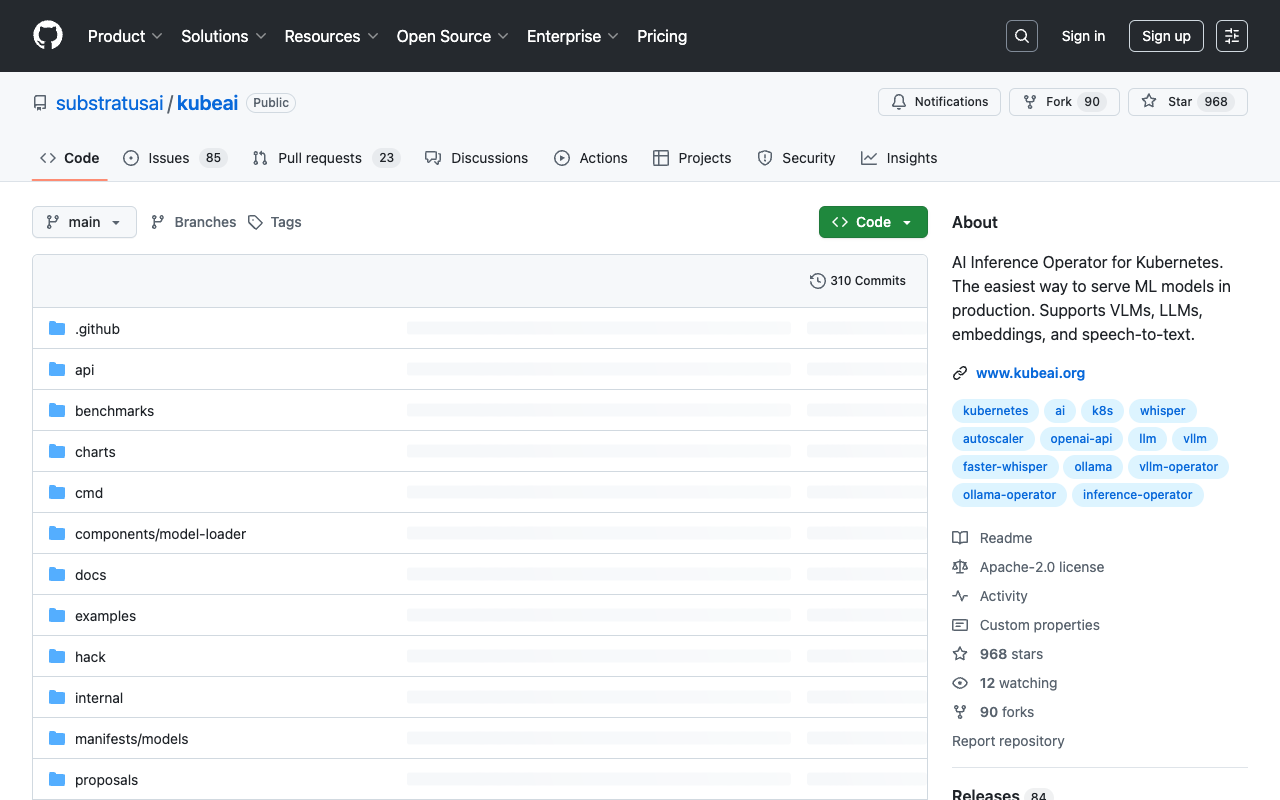

To learn more about KubeAI, visit the KubeAI repository on GitHub.