LLaMa2Lang - Finetune LLaMa2 for any language

LLaMa2Lang is a tool for finetuning LLaMa2-7b, a chat model, for any language other than English. It addresses a limitation of LLaMa2: the model is trained primarily on English data and may not perform well in other languages.

LLaMa2Lang improves LLaMa2's performance in a target language through a short, scripted process. Its convenience scripts translate the Open Assistant dataset into the target language using Helsinki-NLP’s OPUS translation models. The scripts choose a model for each language pair and fall back to alternatives when a pair has no suitable model.
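The model selection described above can be sketched as follows. This is a minimal illustration, not LLaMa2Lang's actual code: the `opus-mt-{src}-{tgt}` naming follows the pattern Helsinki-NLP uses for its published checkpoints, while the `available` set, the function names, and the choice of English as the pivot language for the fallback are assumptions made for this example.

```python
from typing import List, Set, Tuple


def opus_model_name(src: str, tgt: str) -> str:
    """Build a checkpoint name following the Helsinki-NLP opus-mt-{src}-{tgt} pattern."""
    return f"Helsinki-NLP/opus-mt-{src}-{tgt}"


def plan_translation(
    src: str,
    tgt: str,
    available: Set[Tuple[str, str]],
    pivot: str = "en",  # assumption: English as the intermediate language
) -> List[str]:
    """Return the models to apply, in order, to translate src into tgt.

    `available` is a hypothetical set of (source, target) pairs for which
    a translation model is known to exist.
    """
    if src == tgt:
        return []  # nothing to translate
    if (src, tgt) in available:
        return [opus_model_name(src, tgt)]  # direct model exists
    if (src, pivot) in available and (pivot, tgt) in available:
        # Fallback: translate in two hops through the pivot language.
        return [opus_model_name(src, pivot), opus_model_name(pivot, tgt)]
    raise ValueError(f"No translation route from {src!r} to {tgt!r}")


if __name__ == "__main__":
    pairs = {("en", "nl"), ("es", "en")}
    print(plan_translation("en", "nl", pairs))  # direct route
    print(plan_translation("es", "nl", pairs))  # two-hop route via English
```

Each returned name could then be loaded with a translation library of choice; the two-hop route trades some translation quality for coverage of pairs that have no direct model.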

The finetuning process extracts conversation threads from the translated dataset and converts them into training texts using LLaMa2’s chat prompt format. Training on these texts produces a language-specific adapter for the LLaMa2-7b chat model, which makes the model usable for chat applications in the target language.
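As a rough sketch of the thread-to-text step, the function below renders a conversation in the widely documented LLaMa2 chat layout (`[INST] ... [/INST]` turns, with an optional `<<SYS>>` block in the first turn). The function name, the `role`/`text` keys, and the `prompter`/`assistant` role values are assumptions modeled on the Open Assistant data; the exact template LLaMa2Lang uses may differ.

```python
from typing import Dict, List


def thread_to_llama2_text(thread: List[Dict[str, str]], system_prompt: str = "") -> str:
    """Render a list of alternating prompter/assistant messages as one training text."""
    parts: List[str] = []
    pending_user = None  # last user message still waiting for a reply
    first_turn = True
    for msg in thread:
        if msg["role"] == "prompter":
            pending_user = msg["text"].strip()
        elif msg["role"] == "assistant" and pending_user is not None:
            user = pending_user
            if first_turn and system_prompt:
                # The system prompt is embedded in the first user turn only.
                user = f"<<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user}"
            parts.append(f"<s>[INST] {user} [/INST] {msg['text'].strip()} </s>")
            first_turn = False
            pending_user = None
    return "".join(parts)


if __name__ == "__main__":
    example = [
        {"role": "prompter", "text": "Wat is de hoofdstad van Nederland?"},
        {"role": "assistant", "text": "De hoofdstad van Nederland is Amsterdam."},
    ]
    print(thread_to_llama2_text(example))
```

A user message without a following assistant reply is dropped here, since an unanswered prompt gives the model nothing to learn from.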

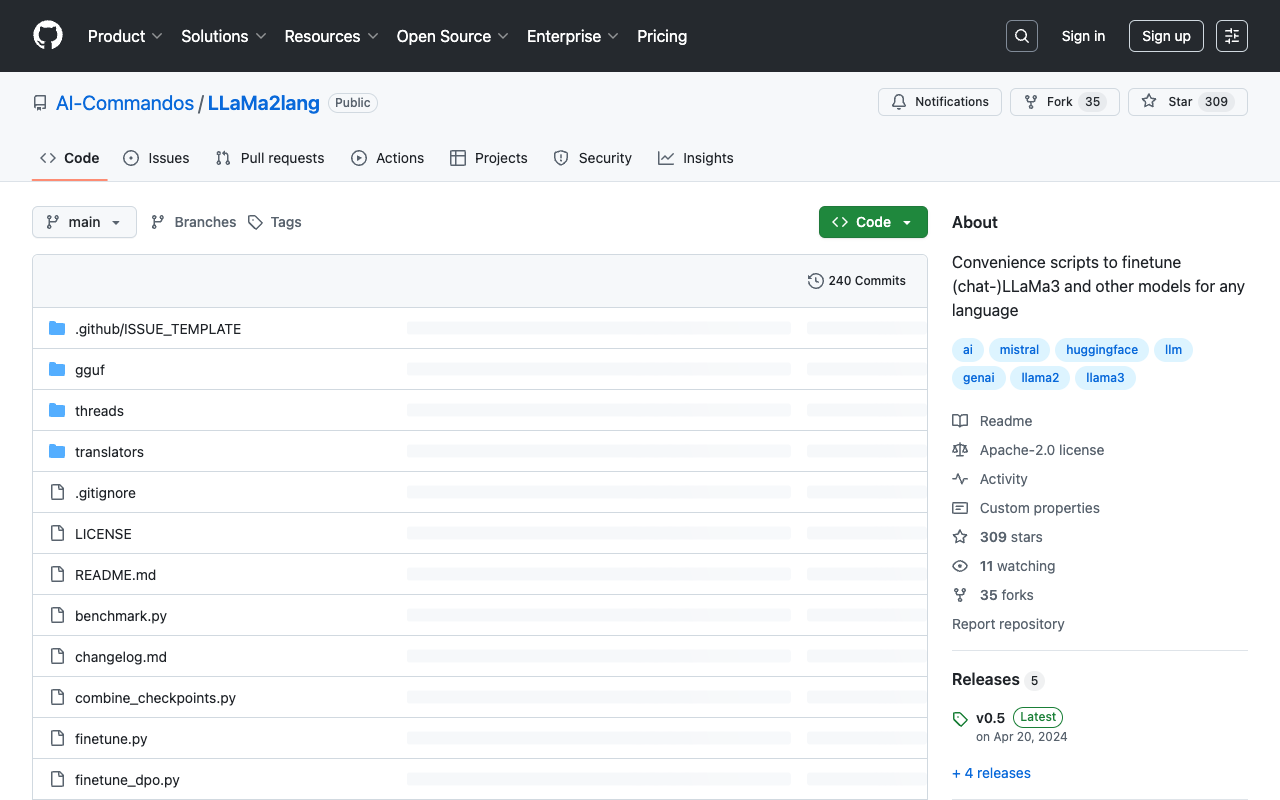

To learn more about LLaMa2Lang and its capabilities, visit the LLaMa2Lang GitHub repository.