Vector Cache - Enhancing LLM Query Performance with Semantic Caching

Vector Cache is a Python library designed to improve the performance of large language model (LLM) queries through semantic caching. As demand for AI applications grows, the library aims to reduce the cost and latency of LLM calls by storing responses and reusing them for new queries that are semantically similar to earlier ones.
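Semantic similarity between two queries is usually measured by mapping each query to an embedding vector and comparing the vectors. One common choice is cosine similarity with a hit threshold. It is shown here for illustration and is not taken from Vector Cache's documentation. Here $\mathbf{e}(\cdot)$ is an embedding model, $\mathcal{C}$ the set of cached queries, and $\tau$ a tunable threshold, all assumed names:

$$
\operatorname{sim}(q, c) = \frac{\mathbf{e}(q) \cdot \mathbf{e}(c)}{\lVert \mathbf{e}(q) \rVert \, \lVert \mathbf{e}(c) \rVert},
\qquad
\text{cache hit} \iff \max_{c \in \mathcal{C}} \operatorname{sim}(q, c) \ge \tau
$$

On a hit, the response stored for the best-matching $c$ is returned. On a miss, the LLM is queried and the new query and response pair is added to $\mathcal{C}$.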

Because queries that are semantically similar to earlier ones can be answered from the cache, fewer requests need to reach the LLM itself. Users get faster responses, and applications with heavy LLM usage pay less.
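The lookup-then-fallback flow described above can be sketched as a small cache-aside wrapper. This is a minimal, self-contained illustration and not Vector Cache's API. `SemanticCache`, `embed`, `cosine`, `lookup`, `ask`, and `threshold` are hypothetical names. A bag-of-words vector stands in for a real embedding model so the example runs without dependencies.

```python
import math
import re
from collections import Counter
from typing import Callable, List, Optional, Tuple


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model (assumption): lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity of two sparse count vectors; 0.0 if either is empty.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical cache-aside wrapper; not Vector Cache's actual API."""

    def __init__(self, llm: Callable[[str], str], threshold: float = 0.8):
        self.llm = llm                # function that actually queries the LLM
        self.threshold = threshold    # minimum similarity counted as a hit
        self.entries: List[Tuple[Counter, str]] = []
        self.llm_calls = 0

    def lookup(self, query: str) -> Optional[str]:
        # Return the response of the most similar cached query, if close enough.
        q = embed(query)
        best_score, best_response = 0.0, None
        for vec, response in self.entries:
            score = cosine(q, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def ask(self, query: str) -> str:
        cached = self.lookup(query)
        if cached is not None:
            return cached             # cache hit: no LLM request
        self.llm_calls += 1           # cache miss: call the LLM and store result
        response = self.llm(query)
        self.entries.append((embed(query), response))
        return response


if __name__ == "__main__":
    cache = SemanticCache(lambda p: f"answer to: {p}")
    print(cache.ask("What is the capital of France?"))  # miss: calls the LLM
    print(cache.ask("What's the capital of France?"))   # hit: similarity 5/6
    print(cache.llm_calls)                              # 1
```

A production cache would replace `embed` with a sentence-embedding model and the linear scan with a vector index. The threshold trades hit rate against the risk of returning an answer to a question that was only superficially similar.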

The library gives developers a streamlined way to make their AI applications more efficient. By combining semantic similarity matching with efficient caching, Vector Cache can improve the responsiveness and cost of LLM-based applications across many domains.

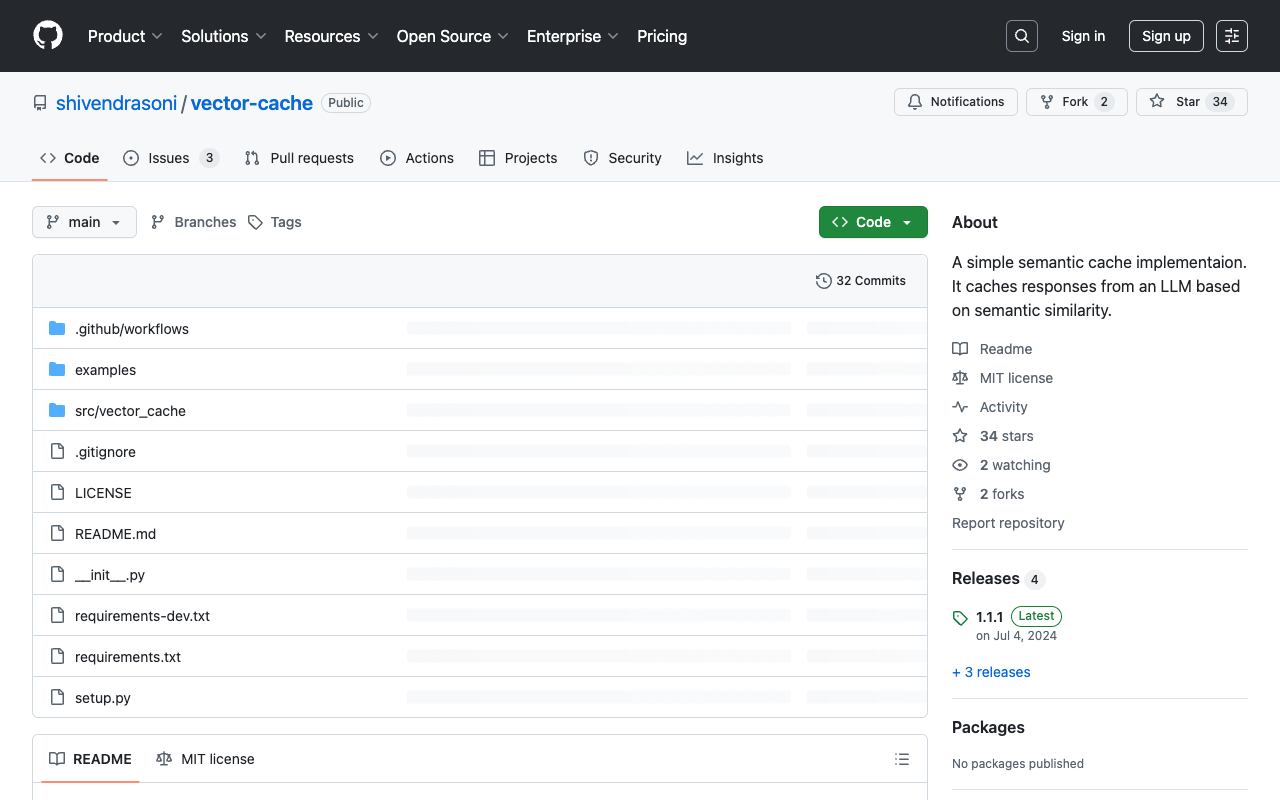

For more information, see Vector Cache on GitHub.